Interaction with smart glasses

In a previous post we discussed some of the interaction methods available for the smart glasses on the market today, such as voice and touch, and presented our vision for future interaction. But how does current research approach these interaction problems?

Touch interaction with Google Glass

Recent research shows that the interaction methods currently available are not well suited to the devices we use. Touch interaction with smart devices is tedious and repetitive, and because the user constantly has to touch a screen, their hand often blocks their view.

With smart glasses, the only surface the user can touch is the spectacle frame, which makes touch input tiring and unergonomic. The downsides of touch interaction leave voice as the only other available option for small smart devices such as smart glasses and watches. That is why almost all smart glasses today are primarily voice-controlled. But voice interaction is not always suitable either. As we saw in our latest post on the characteristics users want in their glasses, users did not prefer voice interaction, and in crowded or noisy environments other interaction methods have to be available.

None of this is news to researchers in smart glass interaction. Below we look at the current state of research and the approaches it takes.

Research approaches to smart glass interaction

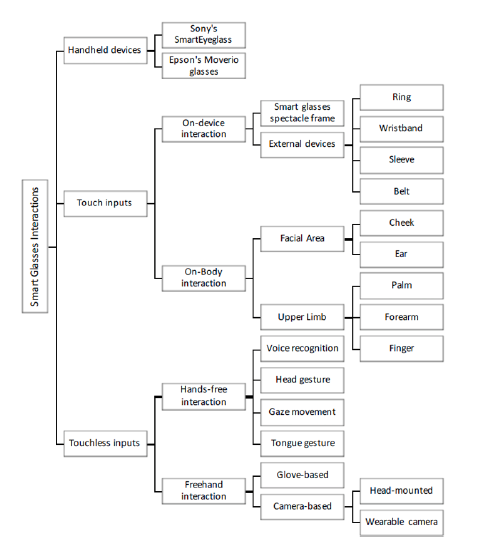

Research on interaction with smart glasses can be divided into three areas: handheld devices, touch input, and touchless input. Handheld devices refers to smartphones and trackpads used to control the smart glasses. Touch input covers non-handheld touch input and divides into on-device interaction and on-body interaction. Touchless input covers input that is neither handheld nor touch-based and divides into hands-free interaction and freehand interaction.

Classification of interaction approaches by Lee, L. H., and Hui, P. (2018).

We will take a closer look at these areas, focusing on touch and touchless input because of their potential to become standard interaction tools in the future, whereas future interaction methods aim to move away from handheld devices.

Touch input: On-device interaction

On-device interaction means the user performs input on devices that are not handheld. These include the touch interface on the smart glasses themselves and external wearable devices such as finger-worn, arm-worn, and touch-belt devices.

Touch interface on smart glasses

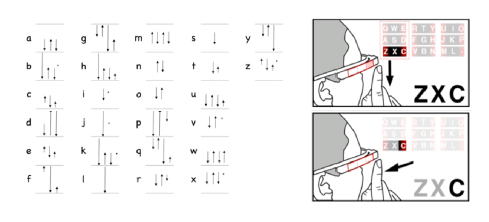

To tackle text entry on Google Glass, researchers have explored swipe-based gesture systems on the spectacle frame of the smart glasses. One proposal is a uni-stroke system, in which every character is a combination of easy-to-remember, two-dimensional strokes that mimic handwriting. This system is shown on the left in the image above.

Two proposals for text input on Google Glass. From Lee, L. H., and Hui, P. (2018).
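
At its core, a uni-stroke recognizer reduces each swipe to a direction and looks the resulting sequence up in an alphabet. The sketch below illustrates that idea under stated assumptions: it uses only four stroke directions, and its tiny alphabet is made up for illustration, not the stroke set from the research. The names `quantize`, `ALPHABET`, and `decode_strokes` are hypothetical.

```python
def quantize(dx, dy):
    """Reduce one stroke's displacement to a direction (touchpad y grows downward)."""
    if abs(dx) >= abs(dy):
        return "R" if dx >= 0 else "L"
    return "D" if dy >= 0 else "U"


# Hypothetical alphabet: each character is a short sequence of stroke
# directions loosely imitating how the letter is handwritten.
ALPHABET = {
    ("D",): "i",
    ("D", "R"): "l",
    ("L", "D", "R"): "c",
    ("D", "R", "U"): "u",
}


def decode_strokes(strokes):
    """Map a list of (dx, dy) stroke displacements to a character, or None if unknown."""
    key = tuple(quantize(dx, dy) for dx, dy in strokes)
    return ALPHABET.get(key)


print(decode_strokes([(-6, 1), (0, 9), (7, -1)]))  # prints c
```

Quantizing directions rather than matching raw coordinates keeps the strokes easy to remember and tolerant of sloppy swipes on a narrow frame.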

Another proposed swipe-based system divides the touchpad on the spectacle frame into three zones: back, middle, and front. This is illustrated on the right in the image above. Each character is entered with two swipes on the frame: the first selects a block of three characters, and the second selects the target character within that block.
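
The two-swipe scheme described above can be sketched as a two-level lookup. One assumption needs to be stated up front: the text does not say how a single first swipe chooses among enough blocks to cover the alphabet. This sketch therefore assumes three hypothetical gesture types per zone, giving 3 x 3 = 9 blocks of three characters, which fits the 26 letters plus a space. The gesture names and functions are illustrative only.

```python
import string

# The three touchpad zones on the frame, as described in the text.
ZONES = ("back", "middle", "front")
# Hypothetical: three gesture types per zone, so the first swipe can pick 1 of 9 blocks.
GESTURES = ("swipe_forward", "swipe_backward", "tap")

# 26 letters plus a space fill exactly 9 blocks of 3 characters.
CHARS = string.ascii_lowercase + " "
FIRST_SWIPES = [(zone, gesture) for zone in ZONES for gesture in GESTURES]
BLOCKS = {first: CHARS[3 * i:3 * i + 3] for i, first in enumerate(FIRST_SWIPES)}


def decode(first_swipe, second_zone):
    """The first swipe picks a block; the zone of the second swipe picks a character in it."""
    block = BLOCKS[first_swipe]
    return block[ZONES.index(second_zone)]


def decode_word(swipes):
    """Decode a list of (first_swipe, second_zone) pairs into text."""
    return "".join(decode(first, second) for first, second in swipes)


# "h" and "i" are the middle and front characters of block "ghi" at ("back", "tap").
print(decode_word([(("back", "tap"), "middle"), (("back", "tap"), "front")]))  # prints hi
```

Splitting selection into two coarse choices is what makes a small touchpad usable: each swipe only needs to land in one of three zones rather than on a specific key.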

Finger-worn, arm-worn and touch-belt devices

Finger-worn devices have gained a lot of attention in recent years because they support small, discreet, single-handed interaction. These rings either recognize stroke-based finger movements on any surface or provide a touch surface directly on the ring. Some rings can even recognize finger bending, which enriches gesture interaction.

Arm-worn devices offer a larger interaction surface than finger-worn devices. They use sensors to capture arm movements or muscle tension, or they support gestures such as strokes and taps directly on the wristband.

Touch-belt solutions move the stroke-based interactions from the spectacle frame of the glasses to the user's belt. Their proponents argue that this is more ergonomic because the user no longer has to lift their arm to interact with the glasses; small, discreet interactions on the belt are all that is needed.

The advantage of an external non-handheld input device is the precise spatial mapping between the device and the interface of the glasses, which enables fast and accurate input, even for repetitive tasks. The downside is the device itself: an additional external device has to be worn.
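
One simple form of such a spatial mapping is an absolute, linear mapping from the touch surface to the display. The sketch below assumes a rectangular touch surface, such as a belt segment or wristband, and a pixel display. The function name and all dimensions are hypothetical and not taken from any specific device.

```python
def map_to_display(touch_x, touch_y, pad_size, display_size):
    """Absolute mapping: each point on the touch surface corresponds to one display point."""
    pad_w, pad_h = pad_size
    disp_w, disp_h = display_size
    # Normalize to [0, 1] and clamp so edge touches never land outside the display.
    u = min(max(touch_x / pad_w, 0.0), 1.0)
    v = min(max(touch_y / pad_h, 0.0), 1.0)
    return round(u * (disp_w - 1)), round(v * (disp_h - 1))


# A 60 x 20 mm touch surface driving a 640 x 360 px display (assumed sizes).
print(map_to_display(15, 5, (60, 20), (640, 360)))  # prints (160, 90)
```

Because the mapping is absolute, the same spot on the device always lands on the same spot in the interface. That consistency is the property the paragraph above credits for fast, accurate input.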

Touch input: On-body interaction

On-body interaction uses the palm, forearm, fingers, face, and ears as interaction surfaces. Its benefit is natural tactile feedback, which can enable eyes-free input.

Palm, forearm and finger as surface

There are several approaches to using the palm or forearm as an interaction surface. Solutions range from optical depth sensors that detect touches on the skin to vibration sensors that detect waves propagating along the arm's skeletal structure when a finger presses on the skin.

PalmType: a palm-based keyboard for text entry. From Lee, L. H., and Hui, P. (2018).

Some solutions project visual content onto the palm or forearm to show what can be interacted with, while others use the display of the smart glasses. The interaction can also rely entirely on tactile feedback, for example by using the forearm as a trackpad that the user rubs across. Research has shown that tactile feedback is the most important factor in on-body interaction performance, and the success of the method depends on it.

iSkin is a mature prototype: a thin, flexible overlay that can be placed on the skin on almost any part of the body and used as an interaction surface. It gives the user the performance-enhancing tactile feedback described above and can be customized to the user's preferences.

Using the fingers as a surface differs from using the palm in that the interactions involve only the fingers and exclude the palm. These interactions take advantage of how precisely humans can move their fingers relative to one another, and they can be performed discreetly and seamlessly. One approach to sensing finger interactions uses a magnetic sensor placed on the fingernail. iSkin can also be used on the finger, face, and ear.

Face and ear as surface

The motivation for using the face or ear as an interaction surface is that we already touch our face approximately 16 times per hour, so touching one's face is already socially acceptable. Another benefit is that the face provides enough space for different kinds of interactions to be performed easily. The ear is a smaller surface, so fewer interactions can be performed there. However, interacting with one's face or ear is just as prone to fatigue as interacting with the spectacle frame of the smart glasses.

The largest benefit of on-body interaction is tactile feedback. It improves performance because the user does not have to rely on visual feedback alone, and our sense of touch on our own bodies is already well calibrated.

Users can also fully immerse themselves in the augmented world, since they don't have to switch their attention between the visual content and the input interface.

The downside is that not all areas of our body are large enough for intuitive and easy interaction, and those that are may be less socially acceptable to interact with.

Touchless input: Hands-free interaction

Hands-free interaction can rely on head movements, tongue movements, gaze, or voice recognition.

Head-movement input relies on the gyroscope and accelerometer in the smart glasses, which detect movement in the up-down and left-right directions. Head movements have been used for authentication, as a game controller, and for text input. However, they are not ergonomic over longer periods and cannot serve as the sole interaction method.
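
A minimal sketch of how up-down and left-right head movements might be recognized from the gyroscope alone. It assumes pitch and yaw rates in degrees per second, a sign convention in which positive pitch means tilting up and positive yaw means turning right, and an arbitrary 60 degrees per second threshold. A real system would also filter noise and could fuse accelerometer data, which this sketch ignores. The function name is hypothetical.

```python
def classify_head_gesture(samples, threshold=60.0):
    """Classify a short window of gyroscope readings as a head gesture.

    samples: list of (pitch_rate, yaw_rate) pairs in degrees per second.
    Assumed convention: positive pitch = tilting up, positive yaw = turning right.
    Returns "up", "down", "left", "right", or None if the movement is too small.
    """
    if not samples:
        return None
    # Strongest rotation seen on each axis, keeping its sign.
    peak_pitch = max((pitch for pitch, _ in samples), key=abs)
    peak_yaw = max((yaw for _, yaw in samples), key=abs)
    if max(abs(peak_pitch), abs(peak_yaw)) < threshold:
        return None  # ignore small, unintentional head motion
    # The dominant axis decides between an up-down and a left-right gesture.
    if abs(peak_pitch) >= abs(peak_yaw):
        return "up" if peak_pitch > 0 else "down"
    return "right" if peak_yaw > 0 else "left"


print(classify_head_gesture([(5, 2), (80, 10), (20, 3)]))  # prints up
```

The threshold is the main design lever: set too low, ordinary head motion triggers commands; set too high, the user has to make large, tiring movements, which echoes the ergonomic limits noted above.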

Tongue-based interaction was originally developed for paralyzed individuals, but the technology has recently been investigated for augmented reality interaction. Proposed methods place sensors either intrusively or non-intrusively. Intrusive solutions place infrared optical sensors inside the mouth or on the user's chin to measure the muscle movements of the tongue. Non-intrusive solutions use wireless signals to detect different facial gestures driven by the cheeks.

Solutions relying on tongue movement reach an accuracy of 90-94%, which is very promising, although they support only up to eight different gesture combinations and do not yet address more complex interfaces.

Gaze interaction has major advantages: gaze can act as a mouse pointer and, combined with hand gestures, can select, manipulate, and rotate virtual objects. It builds on our natural eye-hand coordination, since we always look at an object we want to interact with before grabbing it to manipulate it. On the downside, eye-tracking equipment must be calibrated before use and is error-prone. Moreover, unlike virtual reality headsets, no smart glasses on the market today have built-in eye tracking. However, this will be the next step in the development of AR equipment, and as soon as the price of the technology drops, we will start finding it in our smart glasses.
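
The "look, then grab" pattern can be sketched as gaze acting as the pointer and a hand gesture confirming the selection. This is a simplified illustration under stated assumptions: gaze is reduced to a 2D point in display pixels rather than a 3D ray, a 50 px radius is assumed to absorb eye-tracking error, and "pinch" stands in for whatever confirming gesture a system uses. All names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class VirtualObject:
    name: str
    x: float  # position on the display, in pixels
    y: float


def gaze_target(objects, gaze_x, gaze_y, radius=50.0):
    """Return the object nearest the gaze point, or None if nothing is within radius."""
    best, best_dist = None, radius
    for obj in objects:
        dist = math.hypot(obj.x - gaze_x, obj.y - gaze_y)
        if dist <= best_dist:
            best, best_dist = obj, dist
    return best


def on_hand_gesture(objects, gaze_x, gaze_y, gesture):
    """Gaze points, the hand confirms: a pinch selects whatever the user is looking at."""
    if gesture != "pinch":
        return None
    return gaze_target(objects, gaze_x, gaze_y)


scene = [VirtualObject("menu", 100, 100), VirtualObject("photo", 300, 120)]
print(on_hand_gesture(scene, 290, 130, "pinch").name)  # prints photo
```

Requiring a hand gesture to confirm avoids selecting everything the user merely glances at, and the radius gives some tolerance for the calibration errors mentioned above.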

Until eye tracking reaches smart glasses, voice recognition will remain the most commonly used interaction method. Although it performs best among the interaction methods on the market so far, it has many limitations, including poor performance in noisy environments, which can lead to accidental activation, disturbance, or obtrusion. It also disadvantages people who cannot speak and is generally less preferred than other input methods.

Touchless input: Freehand interaction

Freehand interaction uses mid-air hand movements for gestural input and can be supported by a glove or a camera. Sensor gloves can support pointing tasks or text input by tracking the gestures and positions of the hand and fingers. For camera-based input, RGB, depth, infrared, and thermal cameras, among others, have been investigated. These are combined with image processing, tracking, and gesture recognition.

Recent research on gestural input has focused on making gesture input more socially acceptable and on designing a resting posture for long-term use of hand gestures.

Gestural input is often preferred over touch input, especially in interactive environments. However, mouse and touch interaction outperform hand gestures for fast, repetitive tasks. Gesture-recognition software is also resource-intensive and error-prone, which slows interaction down.

Conclusion

None of the interaction techniques described above has revolutionized the field, and several have not even reached the market. This shows how hard it is to solve these interaction problems with a general solution that works in all settings.

Researchers conclude from this that the most suitable interaction method depends on the types of elements the user interacts with, since different interaction methods suit different tasks. 2D content suits precise interaction methods, such as on-device or on-body interaction, while 3D content suits hands-free or freehand interaction. Since smart glass content can combine 2D and 3D elements, the current trend is toward combining methods to create interaction suitable for all types of modalities and situations.
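
The pairing of content types with interaction methods described above can be expressed as a small lookup, where a scene mixing 2D and 3D elements gets the combined set of methods. This is only an illustration of that reasoning: the category labels come from the text, while the data structure and function name are hypothetical.

```python
# Pairings as stated in the text: precise methods for 2D content,
# hands-free or freehand methods for 3D content.
SUITABLE_METHODS = {
    "2d": {"on-device", "on-body"},
    "3d": {"hands-free", "freehand"},
}


def methods_for_scene(element_kinds):
    """Combine the methods suited to every kind of element present in a scene."""
    methods = set()
    for kind in element_kinds:
        methods |= SUITABLE_METHODS[kind]
    return methods


print(sorted(methods_for_scene(["2d", "3d"])))
# prints ['freehand', 'hands-free', 'on-body', 'on-device']
```

A scene with only 2D elements keeps the precise methods alone; adding any 3D element brings in the hands-free and freehand options as well.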

In our view, the focus should be on minimizing the amount of interaction needed. Since no single interaction method works in all situations, the approach should be to develop systems that anticipate and understand users' needs and wants, supporting them through all of their activities during the entire day.

References:

Lee, L. H., & Hui, P. (2018). Interaction methods for smart glasses: A survey. IEEE Access, 6, 28712-28732.