The future ways of interaction

We interact with a myriad of things every day, both physical and digital. Interaction can be viewed as a conversation, with inputs and outputs leading to new inputs and outputs.

Until now, most digital interaction has been designed around the windows, icons, menus, pointer (WIMP) metaphor, in which the device is the focus of the design. The WIMP model assumes that the user has plenty of screen space and a pointing device, and that interacting with the digital content is the user’s primary goal. These assumptions do not hold for today’s use cases of wearable computers such as smart glasses or watches.

“Interaction design is about behavior, and behavior is much harder to understand than appearance.” - Dan Saffer, author of Designing for Interaction

With wearables, interacting with the real world is the primary goal. All interaction design is therefore contextual: an interaction is only relevant if it serves its purpose at that particular time and in that particular context. In addition, the devices often have very small screens and offer no way to use a pointing device for input. They are also often used on the move, which makes additional equipment impractical and excessive.

These conditions have created a need for a completely new way of interacting with our devices. Those who are less eager or less able to invent new ways of interaction simply pair the wearable with the user’s smartphone, as described in our previous post. This adds a device instead of replacing existing ones, even though replacing devices is one of the big potential benefits of smart glasses. The goal must be to make all interactions possible through the wearable itself, and there are several approaches to this.

Gestures and voice interactions

So far, most designers of interactions for AR and smart glasses have relied on gesture and/or voice control. But the technologies behind these types of interaction are not problem-free. Gestures have to be captured by a camera and recognized by an algorithm, which has proven hard to do reliably, leaving only a limited set of distinguishable gestures. Gestures are also often coupled with a pointer fixed at the center of the glasses’ display, which forces the user into unnatural head movements. Another unsolved issue is how the software should tell whether the user is trying to interact with the glasses or is simply talking to another person, since gesturing is a normal part of conversation.

Gesture interaction with HoloLens 2

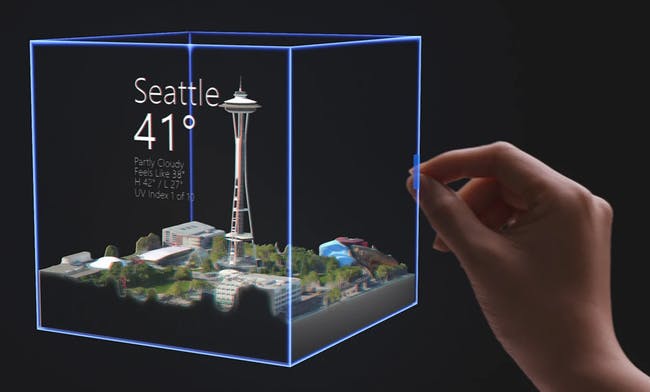

The newly released Microsoft HoloLens 2 comes one step closer to solving some of these issues with gestural interaction. The glasses are equipped with eye trackers, so they can tell what you are looking at, which enables much more user-friendly interaction. Microsoft has also enabled two-handed interaction with holograms, which was previously not supported, making interaction more intuitive.

“HoloLens 2 enables direct manipulation of holograms with the same instinctual interactions you’d use with physical objects in the real world” - Julia White, Corporate Vice President, Microsoft Azure

Voice commands are more flexible than gestures. We have already become acquainted with assistants such as Google Assistant, Alexa, Cortana and Siri built into our smart devices. However, voice commands face other problems. In noisy environments, the software can have trouble understanding them. Voice commands must also be distinguished from natural conversation. So far, this has been solved by starting every interaction by addressing the device by name, as in “Hey Cortana” or “Alexa”. Even so, there are many situations where voice commands are unsuitable, and not all users feel comfortable talking to their devices.

To address these problems with gesture and voice interaction, some smart glasses developers have added other ways of interacting with their glasses. One example is Focals by North. Focals come with what North calls a loop, a small joystick ring that lets you control the glasses in situations where voice interaction is unsuitable. Because the glasses come with both the Alexa assistant and the loop, users can choose their preferred way of interacting.

Focals by North

For warehouse work, Superhumans has solved this issue by using a finger scanner for all interaction with the glasses. This is an easy way to interact with digital content in a warehouse, where scanners are already used in most work situations, but it does not scale beyond that setting.

Looking at these ways of interacting with smart devices, it is evident that there is still some way to go before interaction with wearables is completely natural and intuitive. Reaching unobtrusive, non-distracting interaction will require a whole new way of thinking about and designing for interaction, in which the system is situation-aware to a degree close to human sensitivity in human-to-human communication.

Situational Interaction

Pederson and colleagues (2011) have made a compelling proposal for how to create this kind of sensitive, situational interaction. Their model is based on the notion that human perception and action are inseparable but limited, and that all interaction should therefore be based on the user’s possibilities for perception and action. On that basis, they propose a system that continually collects information about the user’s situation, state, and wishes. The system would use this information to decide how and when to raise an issue with the user, based on discretely detectable cues from body language, pose, and dialog.

Part of the proposed system would be driven autonomously, which is what makes it revolutionary compared with the models used today. By gathering information from sensors and devices distributed in the environment, the system would draw conclusions about what information is relevant to the user in their current state. It would also be responsible for choosing the time and modality of relevant interactions, so as to minimize the user’s overall attentional cost. This creates a whole new type of interaction, shifting from device-centered modeling, such as WIMP, towards body-centered modeling.
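As a rough illustration of the last point, choosing a time and a modality so as to minimize attentional cost, here is a minimal Python sketch. It is not Pederson and colleagues’ model: the sensed cues (`in_conversation`, `walking`, `noisy`), the three modalities, the urgency scale and all cost values are hypothetical assumptions, chosen only to show the shape of such a decision.

```python
from dataclasses import dataclass


@dataclass
class UserState:
    # Hypothetical cues a situation-aware system might infer from sensors.
    in_conversation: bool  # e.g. inferred from dialog / voice activity
    walking: bool          # e.g. inferred from an accelerometer
    noisy: bool            # e.g. inferred from ambient sound level


MODALITIES = ("visual", "audio", "haptic")


def attention_cost(modality: str, s: UserState) -> float:
    """Hypothetical attentional cost of presenting via a modality (lower is better)."""
    # Base costs are made-up numbers; haptic only signals that something arrived,
    # so the user still has to check it, hence the higher base cost.
    cost = {"visual": 1.0, "audio": 1.5, "haptic": 2.0}[modality]
    if modality == "visual" and s.walking:
        cost += 2.0  # reading a display competes with watching where you walk
    if modality == "audio" and (s.in_conversation or s.noisy):
        cost += 3.0  # speech output clashes with conversation or is hard to hear
    if s.in_conversation:
        cost += 1.0  # any interruption during a conversation is costly
    return cost


def plan_delivery(urgency: float, s: UserState) -> tuple[str, str]:
    """Pick the cheapest modality; defer if its cost exceeds the message's urgency."""
    best = min(MODALITIES, key=lambda m: attention_cost(m, s))
    if attention_cost(best, s) > urgency:
        return ("defer", best)  # wait for a better moment
    return ("now", best)
```

With these numbers, a message of urgency 1.5 that arrives while the user is in a conversation is deferred, whereas a message of urgency 3.0 that arrives while the user is walking and talking is delivered immediately as a haptic cue. In a real system the costs would presumably have to be learned or tuned from observed behavior rather than hard-coded.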

Clearly, creating an intuitive and easy way of interacting with smart glasses and other wearables, suitable for any situation, is far from a solved problem. But we believe in, and look forward to, a future where all devices are intelligent enough to know what you aim to do, when you want something, and how to present it in the manner most appropriate to what you are doing at the time.

References:

Pederson, T., Janlert, L. E., & Surie, D. (2011). A situative space model for mobile mixed-reality computing. IEEE Pervasive Computing, 10(4), 73-83.

Saffer, D. (2010). Designing for interaction: Creating innovative applications and devices. New Riders.